Cloud infrastructure has become essential for businesses of all sizes, offering scalability, flexibility, and cost efficiency. That reliance also introduces significant security risks and makes comprehensive monitoring a necessity. Knowing how to monitor and secure your cloud infrastructure is central to protecting your data, maintaining business continuity, and complying with industry regulations. This article provides practical guidance on implementing cloud security measures and establishing monitoring strategies for your cloud environment.

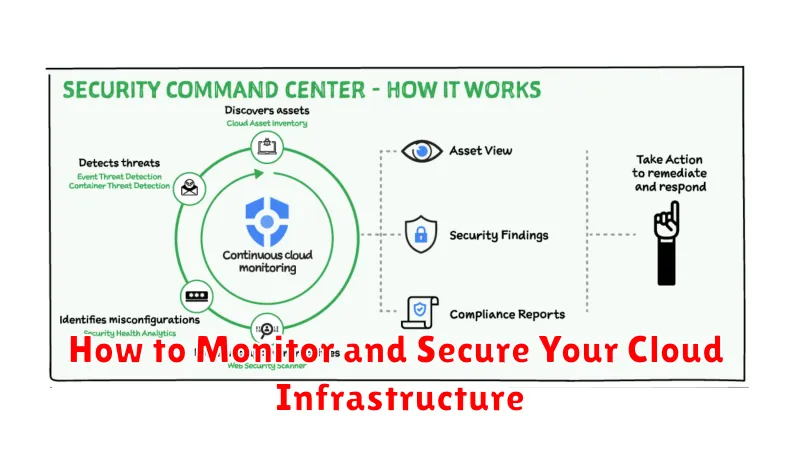

Monitoring your cloud infrastructure lets you identify vulnerabilities, detect anomalies, and respond to security incidents promptly. Security controls such as access control, encryption, and intrusion detection systems reduce risk and preserve the integrity of your cloud environment. From the shared responsibility model, under which the provider secures the underlying infrastructure while you secure the workloads, data, and access you configure on top of it, to dedicated cloud security tools, this article covers the strategies you need to secure and monitor your cloud infrastructure against evolving cyber threats.

Why Cloud Monitoring Is Essential

Cloud monitoring is crucial for maintaining the performance, security, and availability of your cloud infrastructure. It provides real-time visibility into the health and status of your systems, allowing you to proactively identify and address potential issues before they impact your users.

Early problem detection is a key benefit. Monitoring tools can alert you to unusual activity, resource bottlenecks, or performance degradation, enabling swift intervention and minimizing downtime. This proactive approach helps maintain service level agreements (SLAs) and ensures a positive user experience.
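One simple way to catch unusual activity early is to compare each new sample with its recent history. The sketch below is a minimal illustration, not how any particular monitoring product works: it flags a sample that sits more than three standard deviations from the mean of the previous ten. The window size, the cutoff, and the `find_anomalies` name are assumptions chosen for the example.

```python
from statistics import mean, pstdev


def find_anomalies(samples, window=10, z_cutoff=3.0):
    """Return indices of samples more than z_cutoff standard deviations from the trailing window's mean."""
    anomalies = []
    for i in range(window, len(samples)):
        history = samples[i - window:i]
        mu = mean(history)
        sigma = pstdev(history)
        if sigma == 0:
            # A perfectly flat history has no spread; treat any change as anomalous.
            if samples[i] != mu:
                anomalies.append(i)
            continue
        if abs(samples[i] - mu) / sigma > z_cutoff:
            anomalies.append(i)
    return anomalies


# Illustrative data: steady ~50% CPU with one spike.
cpu = [50, 51, 49, 50, 52, 48, 50, 51, 49, 50, 50, 51, 95, 50]
print(find_anomalies(cpu))  # [12]
```

A rolling baseline like this complements fixed alert thresholds: it reacts to sudden changes relative to normal behavior, at the cost of some false positives on naturally bursty metrics.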

Furthermore, cloud monitoring plays a vital role in cost optimization. By tracking resource utilization, you can identify areas of overspending and right-size your cloud resources. This can lead to significant cost savings and improved efficiency.
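Right-sizing starts from utilization data. As a hypothetical sketch, assuming you have already exported average CPU and memory utilization and hourly cost per instance, the following lists instances that look oversized and the monthly spend they represent. The `InstanceUsage` type, instance names, prices, and the 20%/30% cutoffs are all illustrative.

```python
from dataclasses import dataclass


@dataclass
class InstanceUsage:
    instance_id: str          # hypothetical instance name
    avg_cpu_percent: float    # average over the review period
    avg_memory_percent: float
    hourly_cost: float        # illustrative price, not a real rate


def rightsizing_candidates(instances, cpu_max=20.0, mem_max=30.0):
    """Return instances whose average CPU and memory both stay below the cutoffs."""
    return [
        i for i in instances
        if i.avg_cpu_percent < cpu_max and i.avg_memory_percent < mem_max
    ]


fleet = [
    InstanceUsage("web-1", 12.0, 25.0, 0.20),
    InstanceUsage("web-2", 65.0, 70.0, 0.20),
    InstanceUsage("batch-1", 8.0, 15.0, 0.80),
]
candidates = rightsizing_candidates(fleet)
# Roughly 730 hours in a month; this is spend to re-examine, not guaranteed savings.
monthly_spend_under_review = sum(i.hourly_cost for i in candidates) * 730
print([i.instance_id for i in candidates], monthly_spend_under_review)
```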

Security is another critical aspect. Monitoring tools can detect suspicious activities, unauthorized access attempts, and other security threats. This real-time visibility enables you to respond quickly and mitigate potential breaches, protecting your sensitive data and maintaining the integrity of your systems.

Key Metrics You Should Track

Effectively monitoring your cloud infrastructure requires tracking key metrics that provide insights into performance, security, and cost. These metrics should be tailored to your specific needs and the services you utilize. However, some fundamental metrics are universally valuable.

Monitor compute metrics such as CPU utilization, memory usage, and disk I/O to confirm that workloads have enough headroom and to spot bottlenecks. Sustained high CPU utilization, for instance, may indicate the need to scale up to a larger instance or to scale out by adding instances behind a load balancer.

Network performance is crucial for application availability and user experience. Metrics like latency, throughput, and packet loss can reveal network congestion or connectivity issues. Monitoring these metrics helps ensure smooth data flow and optimal application performance.
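Averages can hide network problems, so latency is usually tracked as percentiles alongside throughput and loss. A minimal sketch with made-up sample data, using the nearest-rank percentile method (one of several common definitions):

```python
import math


def percentile(values, pct):
    """Nearest-rank percentile; pct is between 0 and 100."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def packet_loss_percent(sent, received):
    """Share of sent packets that never arrived."""
    return 0.0 if sent == 0 else (sent - received) * 100 / sent


latencies_ms = [20, 22, 21, 19, 250, 23, 20, 22, 21, 24]  # illustrative samples
p50 = percentile(latencies_ms, 50)
p95 = percentile(latencies_ms, 95)
loss = packet_loss_percent(sent=1000, received=985)
print(f"p50={p50} ms, p95={p95} ms, loss={loss}%")
```

Here the median looks healthy while p95 exposes the 250 ms outlier.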

Security metrics are essential for identifying and mitigating threats. Track metrics such as failed login attempts, security group changes, and data access patterns to detect anomalies and potential breaches. Reviewing these metrics regularly helps maintain a secure cloud environment.
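A common security metric is failed logins per source within a sliding time window, which surfaces brute-force attempts. The sketch assumes login events are already parsed into `(timestamp, source_ip, succeeded)` tuples; the five-failures-in-five-minutes limit and the `detect_bruteforce` name are illustrative choices, and the IPs come from documentation address ranges.

```python
from collections import defaultdict, deque


def detect_bruteforce(events, max_failures=5, window_seconds=300):
    """Return source IPs with more than max_failures failed logins within window_seconds.

    events: (timestamp_seconds, source_ip, succeeded) tuples in time order.
    """
    recent_failures = defaultdict(deque)
    flagged = set()
    for ts, ip, succeeded in events:
        if succeeded:
            continue
        window = recent_failures[ip]
        window.append(ts)
        # Discard failures that are now older than the window.
        while ts - window[0] > window_seconds:
            window.popleft()
        if len(window) > max_failures:
            flagged.add(ip)
    return flagged


events = sorted(
    [(t, "203.0.113.7", False) for t in range(0, 60, 10)]        # 6 failures in 50 s
    + [(t, "198.51.100.2", False) for t in range(0, 600, 100)]   # 6 failures spread over 500 s
    + [(30, "192.0.2.10", True)]                                 # a normal successful login
)
print(detect_bruteforce(events))  # {'203.0.113.7'}
```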

Finally, keeping tabs on cost metrics is essential for managing cloud expenditure. Monitor your spending against budget, track cost per resource, and identify areas for potential optimization. This helps ensure cost-efficiency within your cloud infrastructure.
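Tracking spend against budget can be as simple as totaling cost per resource and checking alert thresholds. In this hypothetical sketch the resource names, amounts, budget, and the 50/80/100% thresholds are placeholders; real figures would come from your provider's billing export.

```python
def cost_report(costs_by_resource, monthly_budget, thresholds=(50, 80, 100)):
    """Summarize month-to-date spend against a budget."""
    total = sum(costs_by_resource.values())
    used_pct = total * 100 / monthly_budget
    # Largest cost drivers first, to show where optimization effort pays off.
    top = sorted(costs_by_resource.items(), key=lambda kv: kv[1], reverse=True)
    crossed = [t for t in thresholds if used_pct >= t]
    return total, used_pct, top, crossed


costs = {"db-primary": 1200.0, "web-asg": 800.0, "nat-gateway": 350.0, "s3-logs": 150.0}
total, used_pct, top, crossed = cost_report(costs, monthly_budget=2000.0)
print(total, used_pct, top[0], crossed)
```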

Security Best Practices for Cloud Servers

Securing your cloud servers is paramount to protecting your data and applications. Implementing robust security measures minimizes vulnerabilities and potential threats. One fundamental practice is using strong passwords and enabling multi-factor authentication (MFA) for all user accounts. MFA adds an extra layer of security, making it significantly harder for attackers to gain unauthorized access.
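Many MFA authenticator apps generate time-based one-time passwords (TOTP, RFC 6238). To show what the second factor actually checks, here is a minimal standard-library sketch of TOTP generation and verification with HMAC-SHA1, 30-second steps, and one step of clock-skew tolerance. These are common defaults, assumed here; in production you would rely on your cloud or identity provider's MFA rather than hand-rolled code.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def totp(secret: bytes, for_time: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Compute a TOTP code (RFC 6238) with HMAC-SHA1."""
    if for_time is None:
        for_time = time.time()
    counter = int(for_time // step)  # number of elapsed time steps
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F       # dynamic truncation (RFC 4226)
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def verify(secret: bytes, submitted: str, for_time: Optional[float] = None,
           step: int = 30, digits: int = 6, drift_steps: int = 1) -> bool:
    """Accept the current code or one from an adjacent step to tolerate clock skew."""
    now = time.time() if for_time is None else for_time
    return any(
        hmac.compare_digest(totp(secret, now + k * step, step, digits), submitted)
        for k in range(-drift_steps, drift_steps + 1)
    )


# RFC 6238 test secret; at T=59 s the 8-digit SHA-1 code is 94287082.
secret = b"12345678901234567890"
print(totp(secret, 59, digits=8))
```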

Regularly updating your server’s operating system and software is crucial. These updates often include security patches that address known vulnerabilities. Automated patching can streamline this process and ensure timely updates.
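Automated patching usually starts with knowing which hosts run versions older than the first patched release. The sketch below is hypothetical: the inventory format, host and package names, and version numbers are placeholders, and it assumes plain dotted numeric versions (real distribution package versions need the package manager's own comparison rules).

```python
def parse_version(version: str) -> tuple:
    """Turn "3.0.13" into (3, 0, 13) so versions compare numerically, not as strings."""
    return tuple(int(part) for part in version.split("."))


def hosts_needing_patches(inventory, first_fixed):
    """inventory: {host: {package: installed_version}}
    first_fixed: {package: first version containing the security fix}
    Returns {host: [outdated packages]} for hosts that need updates.
    """
    report = {}
    for host, packages in inventory.items():
        outdated = sorted(
            pkg for pkg, installed in packages.items()
            if pkg in first_fixed and parse_version(installed) < parse_version(first_fixed[pkg])
        )
        if outdated:
            report[host] = outdated
    return report


# Placeholder packages and versions for illustration only.
inventory = {
    "web-1": {"libexample": "3.0.2", "webserverd": "1.24.0"},
    "web-2": {"libexample": "3.0.13", "webserverd": "1.22.1"},
}
first_fixed = {"libexample": "3.0.13", "webserverd": "1.24.0"}
print(hosts_needing_patches(inventory, first_fixed))
```

Parsing into tuples matters: compared as strings, "3.0.2" sorts after "3.0.13", so web-1 would wrongly look patched.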

Firewall configuration is another critical aspect of server security. Properly configuring firewalls allows you to control incoming and outgoing network traffic, blocking unauthorized access attempts. Utilize both network-level and host-based firewalls for comprehensive protection.
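Firewall rules are easiest to reason about with a default-deny policy: traffic is blocked unless a rule explicitly allows it. This sketch models a generic ordered rule list where the first match wins. It is not any specific provider's engine; AWS security groups, for example, support only allow rules, while AWS network ACLs evaluate numbered allow and deny rules in order. The `Rule` type and the example CIDRs are illustrative.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    action: str   # "allow" or "deny"
    source: str   # CIDR block the traffic must come from
    port: int     # destination port


def evaluate(rules, src_ip, port):
    """Return the action of the first matching rule, or "deny" if none match."""
    addr = ipaddress.ip_address(src_ip)
    for rule in rules:
        if rule.port == port and addr in ipaddress.ip_network(rule.source):
            return rule.action
    return "deny"  # default deny


rules = [
    Rule("allow", "0.0.0.0/0", 443),    # HTTPS from anywhere
    Rule("allow", "10.0.0.0/16", 22),   # SSH only from the internal network
]
print(evaluate(rules, "203.0.113.9", 22))   # deny: SSH from the internet
print(evaluate(rules, "10.0.4.20", 22))     # allow: SSH from inside
```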

Implement least privilege access. Grant users only the necessary permissions required to perform their tasks. This limits the potential damage from compromised accounts. Regularly audit user permissions and revoke any unnecessary access.
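A practical way to audit least privilege is to compare what each user is granted with what they actually used over a review period. In this sketch the usage sets are assumed to come from your access logs; the users and permission strings (written in AWS IAM action style) are examples only.

```python
def unused_permissions(granted, used):
    """Return {user: permissions granted but not exercised during the review window}."""
    report = {}
    for user, perms in granted.items():
        unused = perms - used.get(user, set())
        if unused:
            report[user] = unused
    return report


granted = {
    "alice": {"s3:GetObject", "s3:PutObject", "iam:CreateUser"},
    "bob": {"ec2:DescribeInstances"},
}
used = {
    "alice": {"s3:GetObject", "s3:PutObject"},
    "bob": {"ec2:DescribeInstances"},
}
print(unused_permissions(granted, used))  # candidates for revocation
```

An unused permission is a candidate for review rather than automatic removal, since some rights, such as those for rare maintenance jobs, are legitimately exercised infrequently.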

Data encryption is vital for protecting sensitive information. Encrypt data both in transit and at rest: use TLS for data in transit (SSL, its predecessor, is deprecated and should be disabled) and disk, volume, or database encryption for data stored on your servers.
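For data in transit, a client should verify the server certificate and refuse legacy protocol versions. A minimal sketch using Python's standard `ssl` module; the TLS 1.2 floor is an assumed, commonly used baseline that you can raise to TLS 1.3 if all your endpoints support it.

```python
import ssl


def make_client_tls_context() -> ssl.SSLContext:
    """TLS client context that verifies certificates and rejects protocols older than TLS 1.2."""
    ctx = ssl.create_default_context()             # system CA store; certificate and hostname checks on
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2   # refuse SSLv3, TLS 1.0, and TLS 1.1
    return ctx


ctx = make_client_tls_context()
print(ctx.verify_mode == ssl.CERT_REQUIRED, ctx.check_hostname, ctx.minimum_version)
```

Pass the context to `ctx.wrap_socket(sock, server_hostname=...)` or to any HTTP client that accepts an `SSLContext`.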

Using Tools Like CloudWatch, Datadog, or New Relic

Monitoring your cloud infrastructure is crucial for maintaining performance, availability, and security. Leveraging tools like CloudWatch, Datadog, or New Relic can significantly streamline this process. These tools provide comprehensive visibility into your cloud environment, enabling you to track key metrics and identify potential issues proactively.

Amazon CloudWatch, AWS's native monitoring service, covers EC2, S3, and other AWS resources. It provides metrics, logs, and alarms, so you can monitor resource utilization, application performance, and operational health.
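As an example of a CloudWatch alarm, the sketch below builds the arguments for boto3's `put_metric_alarm`, alarming when an EC2 instance's average `CPUUtilization` exceeds 80% for two consecutive five-minute periods. The instance ID, SNS topic ARN, and threshold are placeholders. Calling `create_cpu_alarm` requires the boto3 package and AWS credentials, so only the parameter builder runs standalone.

```python
def cpu_alarm_params(instance_id: str, sns_topic_arn: str, threshold: float = 80.0) -> dict:
    """Keyword arguments for CloudWatch put_metric_alarm on an EC2 instance's CPU."""
    return {
        "AlarmName": f"high-cpu-{instance_id}",
        "Namespace": "AWS/EC2",
        "MetricName": "CPUUtilization",
        "Dimensions": [{"Name": "InstanceId", "Value": instance_id}],
        "Statistic": "Average",
        "Period": 300,                    # seconds per datapoint
        "EvaluationPeriods": 2,           # two consecutive breaching periods
        "Threshold": threshold,
        "ComparisonOperator": "GreaterThanThreshold",
        "AlarmActions": [sns_topic_arn],  # notify an SNS topic when the alarm fires
    }


def create_cpu_alarm(instance_id: str, sns_topic_arn: str, threshold: float = 80.0) -> None:
    import boto3  # imported lazily: needs the boto3 package and AWS credentials
    boto3.client("cloudwatch").put_metric_alarm(
        **cpu_alarm_params(instance_id, sns_topic_arn, threshold)
    )


# Placeholder identifiers, not real resources.
params = cpu_alarm_params("i-0123456789abcdef0", "arn:aws:sns:us-east-1:123456789012:ops-alerts")
print(params["AlarmName"])
```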

Datadog is a comprehensive monitoring platform for cloud-scale applications, infrastructure, and logs. It supports a wide range of integrations, allowing you to monitor various cloud providers and technologies from a single platform. Its customizable dashboards and alerting system enable effective incident management.

New Relic provides real-time insights into your application performance, infrastructure health, and user experience. It offers detailed application profiling, transaction tracing, and error tracking capabilities. This detailed performance analysis aids in optimizing application performance and identifying bottlenecks.

Choosing the right tool depends on your specific requirements and existing infrastructure. Consider factors like cost, integration capabilities, and the level of detail needed for effective monitoring.

Access Controls and Role Management

Access controls form the cornerstone of cloud security. They dictate who can access what resources and what actions they can perform. Implementing the principle of least privilege is crucial. This means granting users only the permissions necessary to perform their job functions, minimizing the potential damage from compromised accounts. Regularly review and revoke unnecessary access to maintain a secure posture.

Role-based access control (RBAC) groups permissions into roles. Instead of assigning individual permissions to each user, you assign roles that bundle the required permissions, which keeps administration manageable in environments with many users and resources. Use predefined roles whenever possible and create custom roles only when necessary.
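The core of RBAC is a mapping from users to roles and from roles to permissions, so a permission check resolves through roles rather than per-user grants. A minimal in-memory sketch; the role names, permission strings, and users are hypothetical.

```python
# Hypothetical role definitions: each role bundles a set of permissions.
ROLES = {
    "viewer": {"logs:read", "metrics:read"},
    "operator": {"logs:read", "metrics:read", "instances:restart"},
    "admin": {"logs:read", "metrics:read", "instances:restart", "iam:manage"},
}

# Users receive roles, never individual permissions.
USER_ROLES = {
    "dana": {"viewer"},
    "eli": {"operator"},
}


def permissions_for(user: str) -> set:
    """Union of the permissions of every role assigned to the user."""
    perms = set()
    for role in USER_ROLES.get(user, set()):
        perms |= ROLES[role]
    return perms


def is_allowed(user: str, permission: str) -> bool:
    return permission in permissions_for(user)


print(is_allowed("eli", "instances:restart"))   # True
print(is_allowed("dana", "instances:restart"))  # False
```

Changing what operators may do is a single edit to `ROLES["operator"]`, which takes effect for every user holding that role.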

Centralized identity management further strengthens security by providing a single point of control for user access across cloud services. It simplifies onboarding and offboarding and lets you enforce password policies and multi-factor authentication (MFA) consistently.

Regularly auditing access controls is paramount. Review user permissions, role assignments, and activity logs to identify anomalies and potential security breaches. Automated tools can help streamline this process and alert you to suspicious activities.
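Part of an access review can be automated by checking activity logs against assigned permissions. This sketch assumes log entries have already been reduced to user, action, and hour (UTC); the `LogEntry` type, the permission data, and the 07:00 to 19:59 UTC business-hours window are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class LogEntry:
    user: str
    action: str
    hour_utc: int  # 0-23


def audit(entries, user_permissions, business_hours=range(7, 20)):
    """Return (user, action, reason) findings worth a human look."""
    findings = []
    for e in entries:
        if e.action not in user_permissions.get(e.user, set()):
            findings.append((e.user, e.action, "not granted by assigned roles"))
        elif e.hour_utc not in business_hours:
            findings.append((e.user, e.action, "outside business hours"))
    return findings


user_permissions = {"dana": {"logs:read"}, "eli": {"logs:read", "instances:restart"}}
entries = [
    LogEntry("dana", "logs:read", 10),        # normal
    LogEntry("dana", "iam:manage", 11),       # outside dana's role
    LogEntry("eli", "instances:restart", 3),  # permitted, but at 03:00 UTC
]
for finding in audit(entries, user_permissions):
    print(finding)
```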

Backup and Disaster Recovery Planning

Backup and Disaster Recovery (BDR) planning is crucial for maintaining business continuity in the face of unforeseen events impacting your cloud infrastructure. A well-defined BDR plan ensures minimal downtime and data loss, allowing for swift recovery.

Your BDR plan should outline regular backup procedures, including the frequency of backups (e.g., daily, weekly), the data retention policy, and the backup storage location. Consider using the 3-2-1 backup strategy: 3 copies of your data, on 2 different media types, with 1 copy stored offsite.
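The 3-2-1 rule is easy to check mechanically against a backup inventory. In this sketch the `BackupCopy` type and media labels are assumptions, and the count includes the production copy, as the rule is usually stated.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BackupCopy:
    media: str     # storage type, e.g. "block-volume", "object-storage", "tape"
    offsite: bool  # True if kept outside the primary site or region


def meets_3_2_1(copies) -> bool:
    """At least 3 copies (production included), on 2+ media types, with 1+ offsite."""
    return (
        len(copies) >= 3
        and len({c.media for c in copies}) >= 2
        and any(c.offsite for c in copies)
    )


good = [
    BackupCopy("block-volume", False),    # production data
    BackupCopy("block-volume", False),    # local snapshot
    BackupCopy("object-storage", True),   # copy in another region
]
no_offsite = good[:2] + [BackupCopy("object-storage", False)]
print(meets_3_2_1(good), meets_3_2_1(no_offsite))  # True False
```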

The plan should also detail the disaster recovery process: the steps for restoring data and applications, the recovery time objective (RTO), which is the maximum acceptable downtime, and the recovery point objective (RPO), which is the maximum acceptable data loss measured as time since the last good copy. Test your BDR plan regularly to validate its effectiveness and uncover weaknesses.
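RPO and RTO become testable once you express them as durations. A minimal sketch, assuming periodic backups: in the worst case a failure occurs just before the next backup, so potential data loss is roughly one backup interval (ignoring backup duration and replication lag), while RTO is checked against the restore time measured in a recovery drill. The durations below are examples.

```python
from datetime import timedelta


def check_objectives(backup_interval: timedelta, measured_restore: timedelta,
                     rpo: timedelta, rto: timedelta) -> dict:
    """Compare a backup schedule and a measured restore drill with RPO/RTO targets."""
    worst_case_data_loss = backup_interval  # failure just before the next backup runs
    return {
        "rpo_met": worst_case_data_loss <= rpo,
        "rto_met": measured_restore <= rto,
    }


# Nightly backups cannot meet a 1-hour RPO, even though a 45-minute restore meets a 2-hour RTO.
result = check_objectives(
    backup_interval=timedelta(hours=24),
    measured_restore=timedelta(minutes=45),
    rpo=timedelta(hours=1),
    rto=timedelta(hours=2),
)
print(result)  # {'rpo_met': False, 'rto_met': True}
```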

Data replication and geo-redundancy are also important. Replicating data across multiple availability zones protects against the failure of a single data center, while replicating across regions (geo-redundancy) keeps data available even if an entire region suffers a disaster.
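Whether a replica layout survives a zone or regional outage comes down to how many distinct failure domains it spans. This sketch treats each replica as a (region, availability zone) pair; the AWS-style names are examples only.

```python
def survives_single_failure(replicas, level: str) -> bool:
    """replicas: list of (region, zone) pairs; level: "zone" or "region".

    Losing any one zone (or region) still leaves a copy if replicas span at least two of them.
    """
    if level == "zone":
        domains = {(region, zone) for region, zone in replicas}
    elif level == "region":
        domains = {region for region, _zone in replicas}
    else:
        raise ValueError("level must be 'zone' or 'region'")
    return len(domains) >= 2


multi_az = [("us-east-1", "us-east-1a"), ("us-east-1", "us-east-1b")]
geo = multi_az + [("eu-west-1", "eu-west-1a")]
print(survives_single_failure(multi_az, "zone"), survives_single_failure(multi_az, "region"))  # True False
print(survives_single_failure(geo, "region"))  # True
```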

Setting Up Alerts and Automated Responses

Alerts are crucial for proactive monitoring. Configure alerts based on key metrics such as CPU usage, memory consumption, disk space, and network traffic. Set thresholds that trigger notifications when these metrics exceed acceptable limits. This allows you to address potential issues before they escalate.
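Alerting on a single datapoint tends to be noisy; requiring several breaches within a short window filters out brief spikes. CloudWatch exposes this idea as "M out of N" alarms (`DatapointsToAlarm` out of `EvaluationPeriods`). The sketch below is a tool-independent version, with the 90% threshold and the 3-of-5 window as illustrative values.

```python
def should_alert(datapoints, threshold, m=3, n=5):
    """Fire when at least m of the last n datapoints exceed threshold."""
    window = datapoints[-n:]
    if len(window) < n:
        return False  # not enough data yet to decide
    return sum(v > threshold for v in window) >= m


cpu = [50, 92, 60, 95, 91]  # three of the last five samples above 90%
print(should_alert(cpu, threshold=90))                   # True
print(should_alert([50, 60, 95, 70, 80], threshold=90))  # False: a single spike
```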

Automated responses can significantly improve both reliability and security. Consider automating tasks such as restarting unresponsive services, scaling resources with demand, or isolating compromised instances. Predefined actions like these contain problems faster than manual intervention.
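Automated responses are often organized as a playbook that maps alert types to remediation actions, with a human fallback for anything unrecognized. In this sketch the alert names and the three handler functions are hypothetical stand-ins; real handlers would call your cloud provider's API.

```python
from typing import Callable, Dict


# Hypothetical remediation hooks; real versions would call provider APIs.
def restart_service(resource: str) -> str:
    return f"restarted {resource}"


def scale_out(resource: str) -> str:
    return f"added capacity to {resource}"


def isolate_instance(resource: str) -> str:
    return f"moved {resource} to a quarantine network"


PLAYBOOK: Dict[str, Callable[[str], str]] = {
    "service_unresponsive": restart_service,
    "sustained_high_load": scale_out,
    "suspected_compromise": isolate_instance,
}


def respond(alert_type: str, resource: str) -> str:
    action = PLAYBOOK.get(alert_type)
    if action is None:
        # Unknown alert types go to a human instead of guessing.
        return f"escalated {alert_type} on {resource} to on-call"
    return action(resource)


print(respond("suspected_compromise", "i-abc"))
print(respond("disk_full", "db-1"))
```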

Deliver alerts through channels such as email, SMS, or chat, and surface them on your monitoring dashboards. Integrate notifications with your existing incident-response workflow to minimize response time.
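Routing by severity keeps urgent alerts loud and minor ones quiet. This sketch's severity levels and channel names are assumptions; unknown severities deliberately fall back to the most urgent routing so that nothing is silently dropped.

```python
SEVERITY_CHANNELS = {
    "critical": ["sms", "email", "chat"],
    "warning": ["email", "chat"],
    "info": ["chat"],
}


def route(alert: dict) -> list:
    """Return the channels for an alert; a missing or unknown severity is treated as critical."""
    return SEVERITY_CHANNELS.get(alert.get("severity"), SEVERITY_CHANNELS["critical"])


print(route({"severity": "warning", "summary": "disk 85% full"}))  # ['email', 'chat']
print(route({"summary": "unclassified alert"}))                     # ['sms', 'email', 'chat']
```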

Regularly review and refine your alert thresholds and automated responses. As your infrastructure evolves, adjustments may be needed to ensure optimal performance and security.